Ensure Your Bot Performs Flawlessly with Our Advanced Testing Interface

Stop worrying about inconsistent bot responses!

With Chat Thing’s cutting-edge testing interface, you can easily verify how your custom AI chatbots respond to specific questions and check that their answers stay accurate and reliable. Create and run comprehensive test cases to refine your bot's behaviour, compare different prompts, and optimise model settings for peak performance.

Why Testing Matters

Having a detailed set of test cases is crucial for any AI chatbot. Here’s how our testing interface can help you:

- Catch Errors Before They Reach Users: Identify and rectify issues before your bot interacts with real users, enhancing user experience.

- Improve Bot Accuracy and Reliability: Ensure your bot consistently delivers precise and relevant responses, building trust with your audience.

Just so you know

Our testing feature is only available to users on the Enterprise plan.

Design Test Cases to Cover All Aspects of Your Bot's Functionality

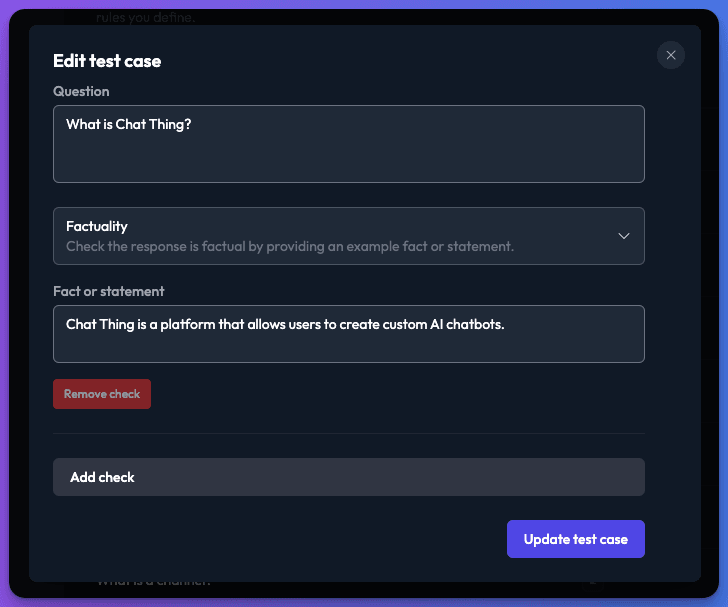

Our intuitive testing interface allows you to quickly build a comprehensive suite of test cases that thoroughly examine your bot's capabilities. Each test case includes a question and multiple checks performed against the bot's response, enabling you to verify factual accuracy, response similarity, and adherence to your defined rules.

Create Tailored Test Cases

For each bot, you can create a set of questions that we will pose during each test run. The bot's responses are then compared against a set of checks that you define.

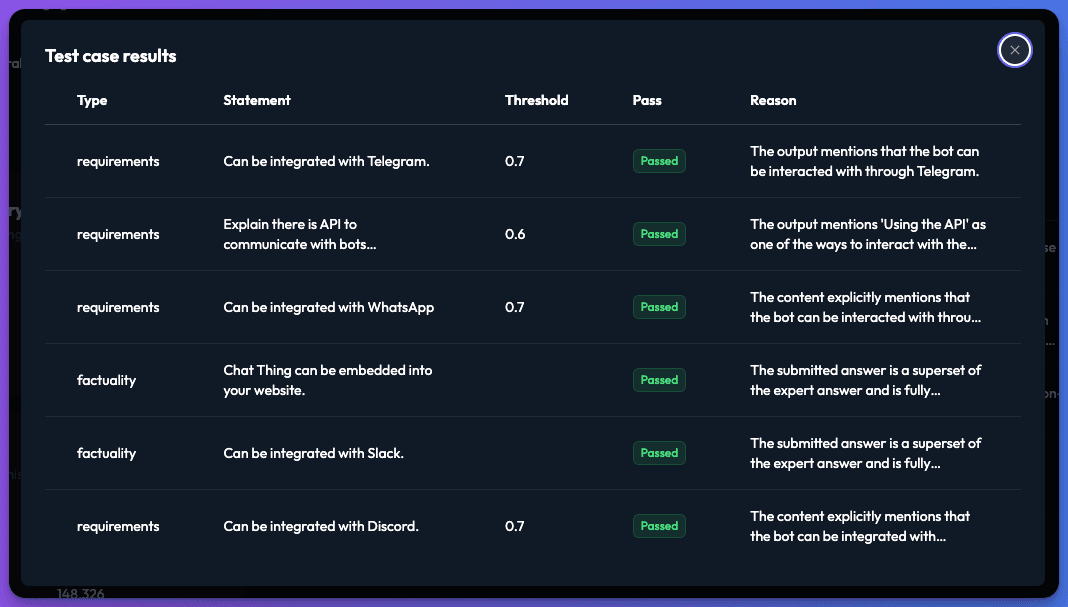

Available Checks

- Factuality - Ensure the response is factually correct by comparing it against an example statement you provide.

- Similarity - Measure how closely the bot's response matches an example answer.

- Requirements - Specify a list of criteria that the answer must fulfil.

- Relevance - Verify that the response is pertinent to the question asked.
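To make the structure concrete, here is a minimal Python sketch of a test case: one question plus a list of checks drawn from the four types above. The class names, field names, the assumed 0 to 1 threshold range and the example content are all hypothetical and chosen for illustration; they are not Chat Thing's data model or API, since test cases are created through the testing interface.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical data model for illustration only; not Chat Thing's actual API.
CHECK_TYPES = {"factuality", "similarity", "requirements", "relevance"}


@dataclass
class Check:
    kind: str                        # one of CHECK_TYPES
    expected: Optional[str] = None   # example statement (factuality) or example answer (similarity)
    requirements: List[str] = field(default_factory=list)  # criteria for a "requirements" check
    threshold: float = 0.7           # assumed minimum score (0 to 1) for a scored check to pass

    def __post_init__(self) -> None:
        # Reject unknown check types and thresholds outside the assumed 0 to 1 range.
        if self.kind not in CHECK_TYPES:
            raise ValueError(f"unknown check type: {self.kind!r}")
        if not 0.0 <= self.threshold <= 1.0:
            raise ValueError("threshold must be between 0 and 1")


@dataclass
class BotTestCase:
    question: str                    # the question posed to the bot on each test run
    checks: List[Check] = field(default_factory=list)


# Example test case with invented content.
refund_case = BotTestCase(
    question="What is your refund policy?",
    checks=[
        Check("factuality", expected="Refunds are available within 30 days of purchase."),
        Check("similarity", expected="You can request a refund within 30 days.", threshold=0.6),
        Check("requirements", requirements=["Mentions the 30-day window",
                                            "Explains how to request a refund"]),
        Check("relevance"),
    ],
)
```

Each check keeps its own threshold, so a strict factuality check and a looser similarity check can sit side by side in the same test case.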

About thresholds

For many of these checks you can provide a threshold, which gives your bot a little wiggle room in how it responds: the lower the threshold, the more lenient the check. A threshold of 0.7 is a good starting point, but you may need to reduce it further depending on your bot's configuration or the complexity of the check.
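To illustrate how a threshold gives a response wiggle room, the sketch below scores a response against an example answer and passes the check when the score reaches the threshold. It uses Python's standard-library `difflib.SequenceMatcher` purely as a stand-in and should not be taken as how Chat Thing scores checks. The 0 to 1 scale and the function names `similarity_score` and `passes` are assumptions for illustration.

```python
from difflib import SequenceMatcher


def similarity_score(response: str, example: str) -> float:
    # Character-level similarity ratio in [0, 1], ignoring case.
    # A crude stand-in scorer, not Chat Thing's actual method.
    return SequenceMatcher(None, response.lower(), example.lower()).ratio()


def passes(score: float, threshold: float = 0.7) -> bool:
    # A check passes when its score meets or exceeds the threshold,
    # so lowering the threshold makes the check more lenient.
    return score >= threshold


response = "Refunds are available for 30 days after purchase."
example = "You can get a refund within 30 days of purchase."
score = similarity_score(response, example)
print(f"score={score:.2f} strict={passes(score)} lenient={passes(score, 0.5)}")
```

A character-level ratio rewards surface overlap rather than meaning, so two correct answers phrased differently can score well below 1.0. That is the kind of variation a threshold below 1.0 tolerates, and why more complex checks may need a lower one.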

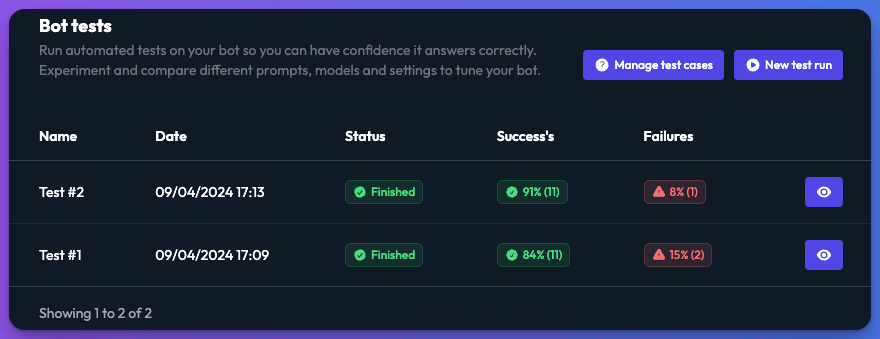

Monitor Test Runs Effectively

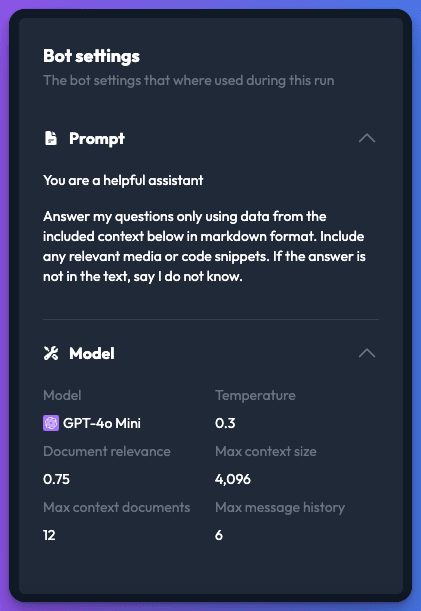

Each time you run your test cases, we capture a snapshot of the bot's configuration, allowing you to review the model and settings used during the test run.

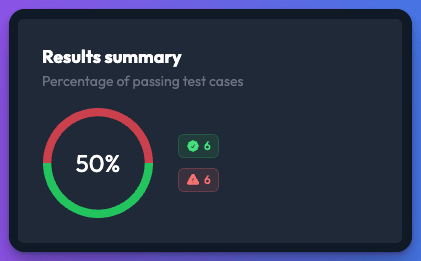

For each test run, you can see how many checks passed and how many failed.

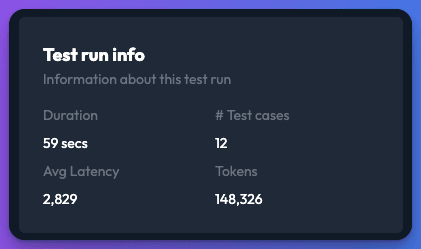

Additionally, you’ll receive key statistics such as the time taken to complete the tests, the average response time, and the number of tokens required to execute the test suite.
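These statistics can be derived from per-test-case results. The sketch below assumes a hypothetical record for each test case (its response time, the tokens it used, and the pass/fail outcome of each check) and aggregates the records into a run summary. The names and fields are illustrative, not Chat Thing's reporting format. The overall duration is passed in separately because the wall-clock time of a run need not equal the sum of the individual response times.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CaseResult:
    # Hypothetical per-test-case record; field names are illustrative assumptions.
    question: str
    response_time_s: float
    tokens: int
    check_passed: List[bool]   # one entry per check on this test case


def summarise(results: List[CaseResult], wall_time_s: float) -> Dict[str, float]:
    # Count passed checks across all test cases (True counts as 1).
    passed = sum(ok for r in results for ok in r.check_passed)
    total_checks = sum(len(r.check_passed) for r in results)
    avg_response = (sum(r.response_time_s for r in results) / len(results)) if results else 0.0
    return {
        "checks_passed": passed,
        "checks_failed": total_checks - passed,
        "total_time_s": wall_time_s,
        "avg_response_time_s": avg_response,
        "total_tokens": sum(r.tokens for r in results),
    }


# Invented example data for two test cases.
results = [
    CaseResult("What is your refund policy?", 1.2, 350, [True, True, False]),
    CaseResult("Do you ship internationally?", 0.8, 250, [True, True]),
]
summary = summarise(results, wall_time_s=5.0)
print(summary)
```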

You can also view the result of each check for every test case, helping you understand why a test may have failed.

Continuous Improvement

We hope this overview of our testing interface provides you with valuable insights into how you can ensure your bot performs flawlessly. We are committed to enhancing this feature in the coming months based on user feedback. If you have any questions or need assistance, please don’t hesitate to get in touch or join our Discord server.