Hello everyone!

We're thrilled to share some exciting updates with you that are sure to enhance your experience with Chat Thing!

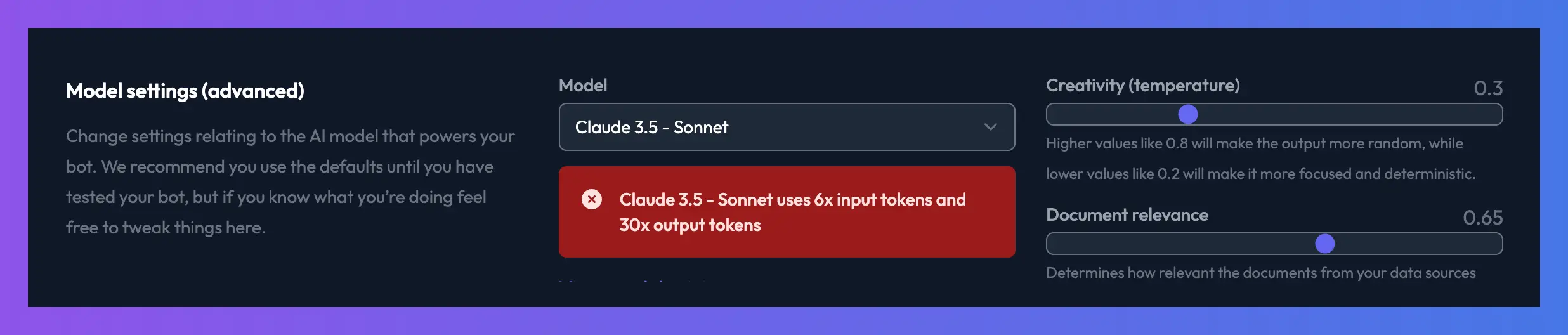

Build AI Chat Bots powered by Claude 3.5 Sonnet!

You can now choose Claude 3.5 Sonnet as the model to power your chat bots built with Chat Thing! This brings the total number of models supported by Chat Thing to an impressive 17!

Why should you use Claude 3.5 Sonnet?

Claude 3.5 Sonnet has been taking the AI scene by storm, with many people finding that it outperforms GPT-4o at a range of tasks. It also works out slightly cheaper than GPT-4o in terms of input tokens, meaning your Chat Thing message tokens will go further.

Additionally, it has a gigantic 200k-token context window compared to GPT-4o's 128k. This gives it more room to hold your chat history and to load your data sources into context, and more potential to generate substantial content. While GPT-4o's 128k context window is sufficient for most tasks, it's beneficial to have the option of a larger context window when needed.
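To put the difference in numbers, here is a rough back-of-the-envelope sketch. It takes 200k and 128k to mean 200,000 and 128,000 tokens; the model labels, the history size, and the space reserved for the reply are made-up figures for illustration, not Chat Thing settings.

```python
# Context window sizes in tokens (200k and 128k taken as round numbers).
# The dictionary keys are just labels for this example, not API identifiers.
CONTEXT_WINDOWS = {"claude-3.5-sonnet": 200_000, "gpt-4o": 128_000}


def tokens_left_for_sources(model: str, history_tokens: int, reserved_for_reply: int) -> int:
    """Tokens still available for data source content after history and reply space."""
    remaining = CONTEXT_WINDOWS[model] - history_tokens - reserved_for_reply
    return max(remaining, 0)


# Hypothetical conversation: 20,000 tokens of history, 4,000 kept free for the reply.
for model in CONTEXT_WINDOWS:
    print(model, tokens_left_for_sources(model, 20_000, 4_000))
# claude-3.5-sonnet 176000
# gpt-4o 104000
```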

You can now select Claude 3.5 Sonnet from your bot's "General settings" within the "Model settings" section.

We'd love to hear your thoughts on this new model!

Enhanced knowledge retrieval

We've introduced a new option called "Enhanced knowledge retrieval" that should significantly improve your bot's responses when users ask vague follow-up questions.

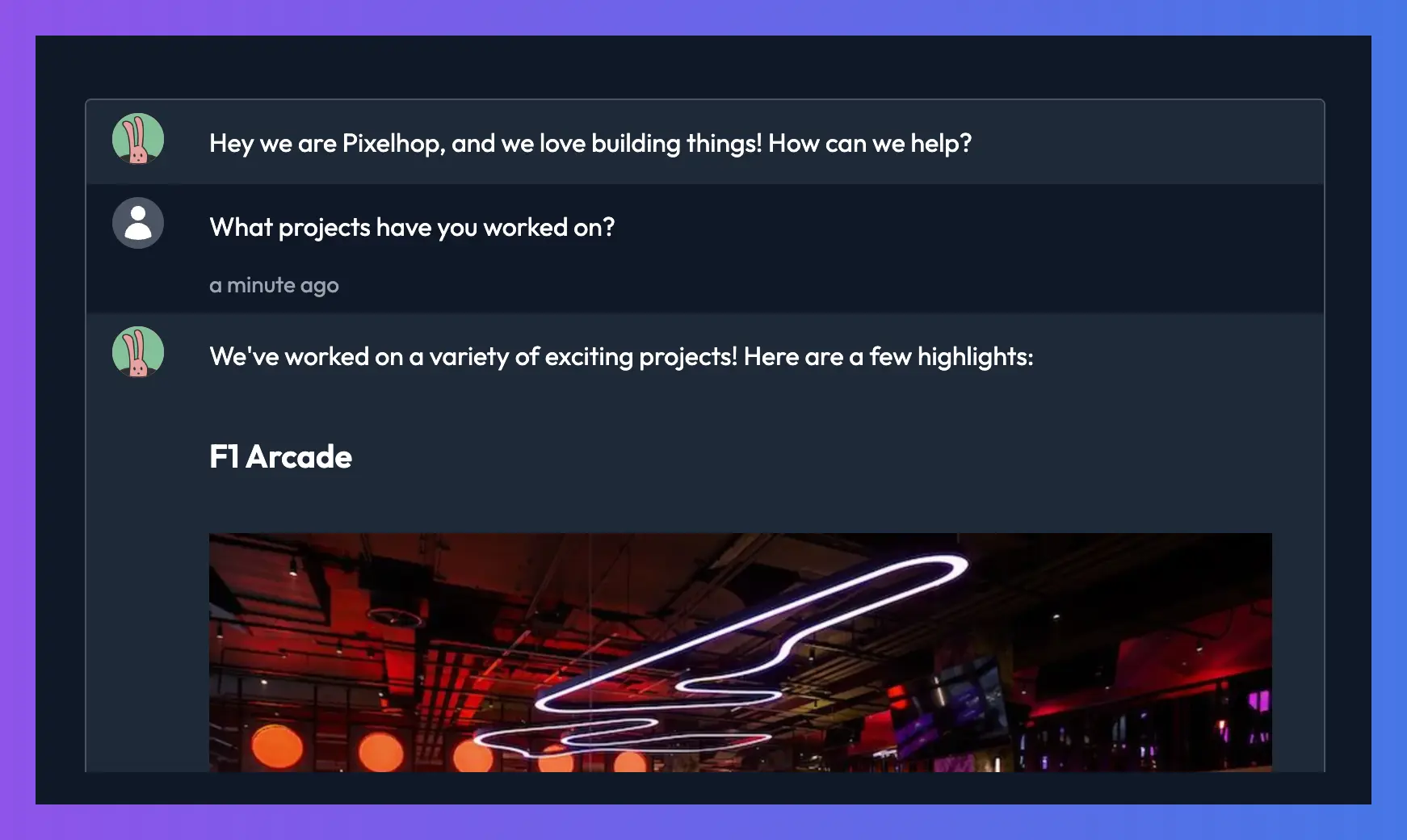

Let's illustrate this with a brief example. Take a look at this conversation with the bot we use on the site for our digital agency, Pixelhop:

The user asks, "What projects have you worked on?" and the bot responds with a list of our projects. That's great, and you can see beneath the response that we have several source links to pages from our site that helped build the response.

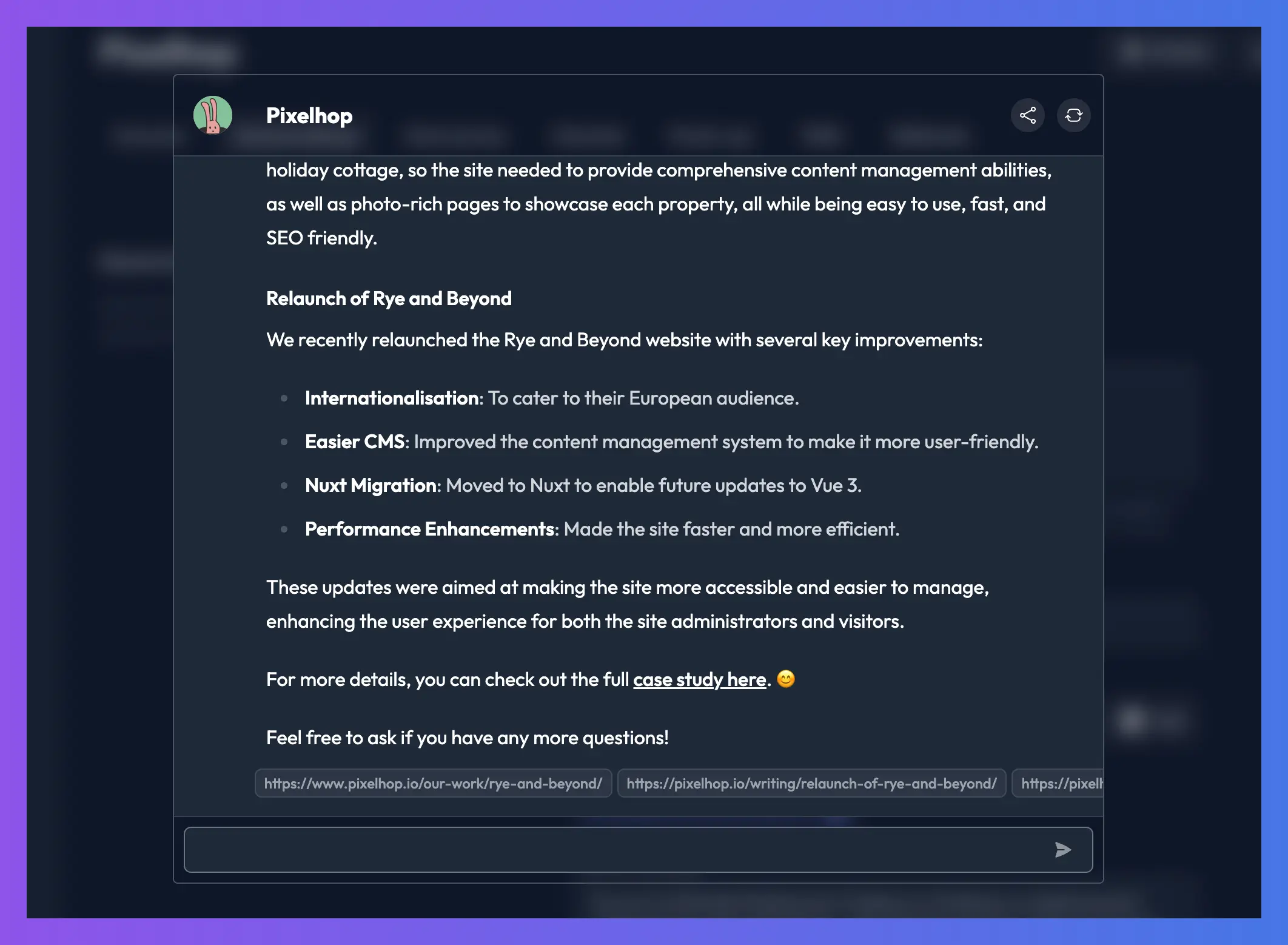

Next, the user asks, "Tell me more about the last one." The bot's response looks acceptable at first glance, but upon closer inspection, it contains several hallucinations and fabricated facts about the project. Interestingly, it has no sources under its response.

Why is this happening? We have an entire page dedicated to this project on the Pixelhop site, so the bot should have all the information it needs to answer correctly.

Prior to the enhanced knowledge retrieval feature, when your bot searched its data sources for relevant knowledge, the search was based only on the user's most recent question. In this case, that question is extremely vague. The bot doesn't know which project the user is referring to, and since the question doesn't explicitly mention the project name, the data source search returns no items it deems relevant enough.
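If you'd like to see the problem in miniature, here is a toy sketch. It is not how Chat Thing searches your data sources (real retrieval typically relies on embeddings rather than word overlap), and the documents, stopword list, and threshold are all invented for illustration. It only shows why a question that never names the project finds nothing.

```python
import re

# Hypothetical stand-ins for pages in a bot's data sources.
DOCUMENTS = {
    "rye-and-beyond": "Case study page for the Rye and Beyond project",
    "projects": "Overview page listing all projects",
    "contact": "Contact page with an email address and phone number",
}

# Small illustrative stopword list; a real system would not work this way.
STOPWORDS = {"a", "an", "and", "the", "me", "about", "of", "to", "for", "with"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct non-stopword words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def search(query: str, min_score: float = 0.5) -> list[tuple[str, float]]:
    """Return (doc_id, score) pairs whose word overlap with the query passes the threshold."""
    query_words = tokenize(query)
    if not query_words:
        return []
    hits = []
    for doc_id, text in DOCUMENTS.items():
        score = len(query_words & tokenize(text)) / len(query_words)
        if score >= min_score:
            hits.append((doc_id, score))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


print(search("Tell me more about the last one"))
# []
print(search("Tell me more about the Rye and Beyond project"))
# [('rye-and-beyond', 0.6)]
```

The vague question shares no meaningful words with any page, so nothing clears the relevance threshold and the bot is left to answer without sources.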

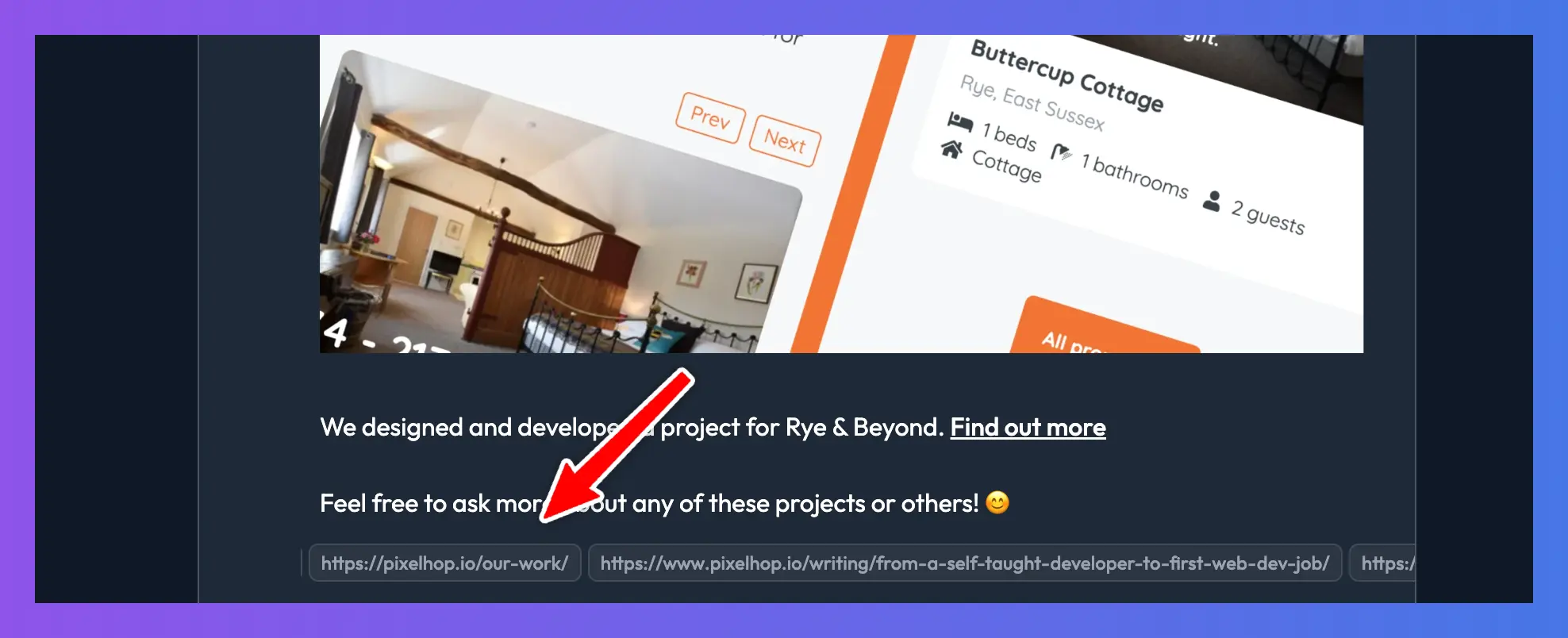

Enhanced knowledge retrieval addresses this by using AI to rewrite the search question with more context from the previous conversation. For example, the question "Tell me more about the last one" will be converted to "Tell me more about the Rye and Beyond project" before it's used to search the data sources. This produces much more relevant search results and significantly reduces the risk of the bot making things up or hallucinating.
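For the curious, the general idea can be sketched in a few lines. This is a simplified illustration of the query-rewriting technique, not Chat Thing's actual implementation: the prompt wording, the function names, and the `complete` callable (standing in for whatever AI model does the rewriting) are all assumptions, and the assistant message in the demo is a placeholder.

```python
from typing import Callable

Message = tuple[str, str]  # (role, text)


def build_rewrite_prompt(history: list[Message], question: str) -> str:
    """Combine the conversation so far with the new question into one instruction."""
    transcript = "\n".join(f"{role}: {text}" for role, text in history)
    return (
        "Rewrite the final user question so it can be understood without the "
        "conversation. Replace vague references with the names they refer to. "
        "Return only the rewritten question.\n\n"
        f"Conversation:\n{transcript}\n\n"
        f"Final user question: {question}"
    )


def rewrite_query(history: list[Message], question: str, complete: Callable[[str], str]) -> str:
    """Return a standalone search query, falling back to the original question."""
    if not history:
        return question  # nothing to add context from
    rewritten = complete(build_rewrite_prompt(history, question)).strip()
    return rewritten or question


# Demo with a canned stand-in for the model call (hypothetical; no real API is used).
def fake_complete(prompt: str) -> str:
    return "Tell me more about the Rye and Beyond project"


history = [
    ("user", "What projects have you worked on?"),
    ("assistant", "(a list of projects, ending with Rye and Beyond)"),
]
print(rewrite_query(history, "Tell me more about the last one", fake_complete))
# Tell me more about the Rye and Beyond project
```

The rewritten question is used only for searching the data sources. Falling back to the original question when there is no history or the rewrite comes back empty keeps the search from ever running on a blank query.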

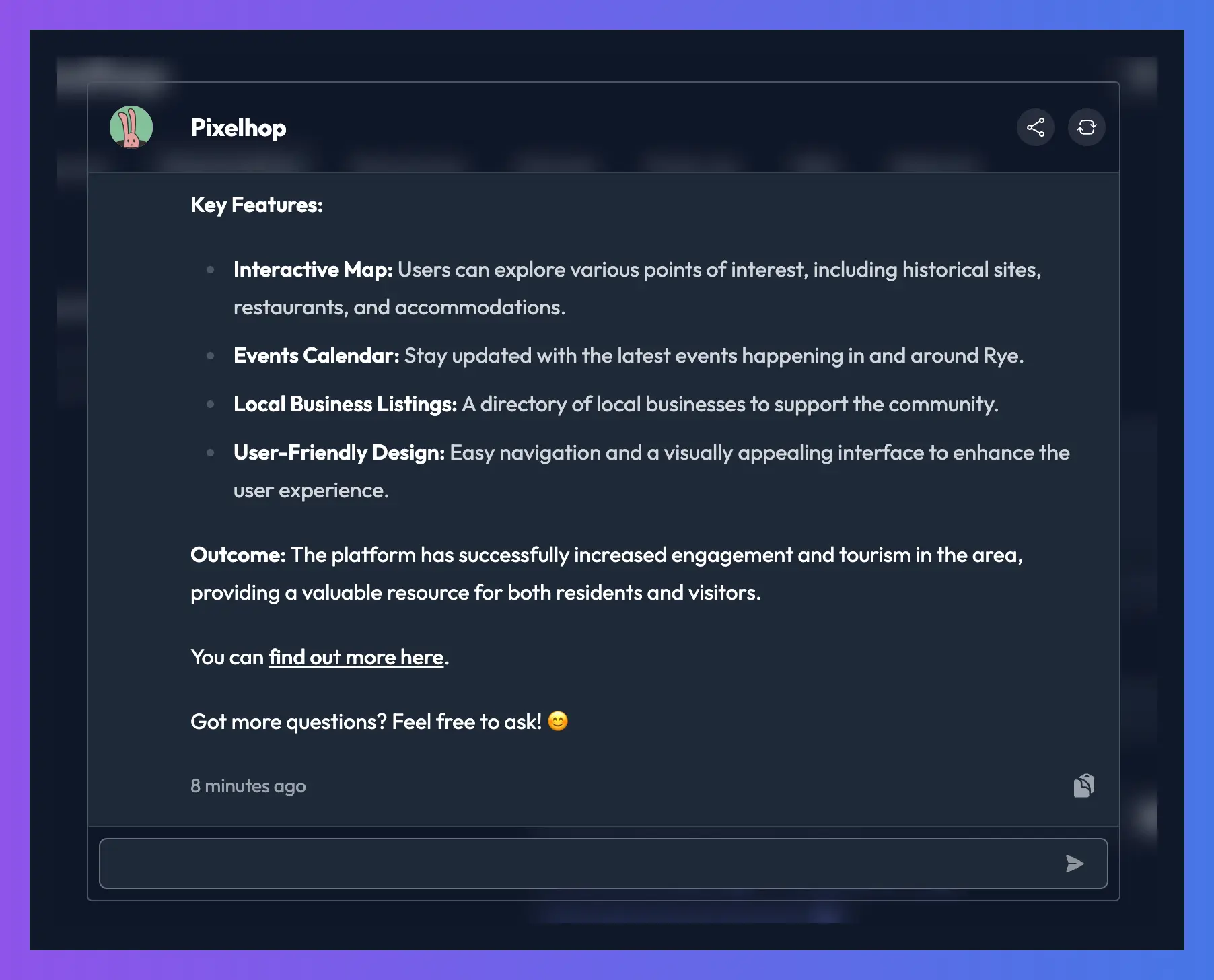

Observe how much better this answer is, with no hallucinations, because it's using the relevant knowledge from its data sources, as you can see under the answer:

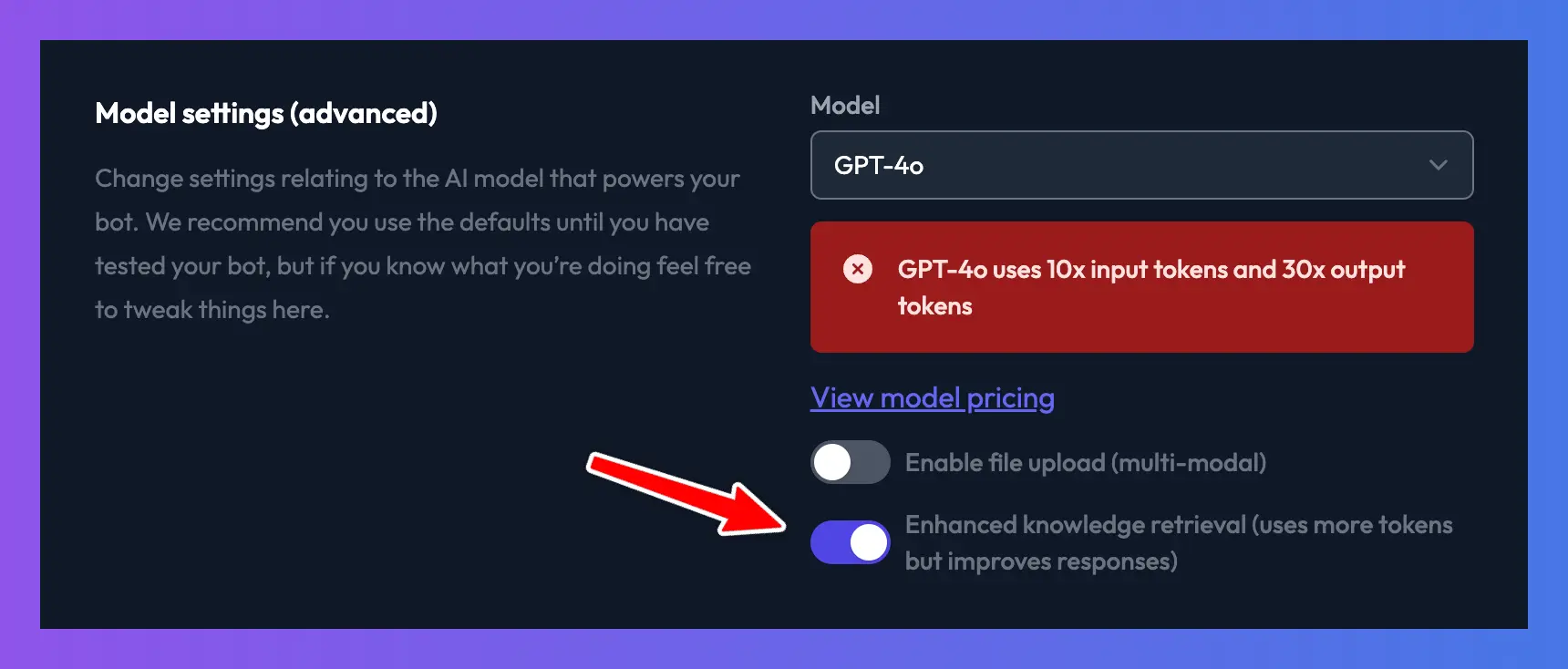

You can turn this setting on from your bot's "General settings" in the "Model settings" section.

Enabling this setting uses slightly more message tokens and may slow your bot's responses marginally, but we believe that in most cases this trade-off is worthwhile for better responses.

If the reaction to this feature is positive, we may consider enabling it by default in the future.

That's all for now

We're excited about these new features and how they can improve your Chat Thing experience. We encourage you to try them out and let us know your thoughts. Your feedback is invaluable in helping us continue to enhance our platform.

Have you tried these new features yet? We'd love to hear about your experience!